一,概述

当我们访问数据库的表时,需要从系统表中获取一些表的相关信息。这种动作是非常频繁的,为了提高获取这些信息的效率,PostgreSQL设定了两个Cache——SysCache和RelCache。SysCache中存放的是最近使用过的系统表的元组,而RelCache中存放的是最近访问过的表的模式信息(包含系统表的信息)。简单来说,SysCache存放的是每个系统表最近使用过的元组,RelCache存放的是最近使用过的表的RelationData结构(RelCache部分会讲这个结构)。当我们打开一个表时,就会创建一个RelationData结构,这个结构会包含这个表的所有信息(这些信息都是从系统表中查到的)。

二,SysCache

1. SysCache的内部组织结构

从实现上看SysCache就是一个数组,数组长度为预定义的系统表的个数。数组的每个元素为CatCache类型,该结构体内使用Hash来存储被缓存的系统表元组,每一个系统表唯一的对应一个SysCache数组中的CatCache结构。每个CatCache都有若干个(最多不超过4个)查找关键字,这些关键字及其组合可以用来再CatCache中查找系统表元组,在initdb的时候会在这些关键字上为系统表创建索引。

系统表的信息在一个静态数组cacheinfo里面存着,这个数组的元素类型为cachedesc:

/*

* struct cachedesc: information defining a single syscache

*/

struct cachedesc

{

Oid reloid; /* OID of the relation being cached */

Oid indoid; /* OID of index relation for this cache */

int nkeys; /* # of keys needed for cache lookup */

int key[4]; /* attribute numbers of key attrs */

int nbuckets; /* number of hash buckets for this cache */

};static const struct cachedesc cacheinfo[] = {

{AggregateRelationId, /* AGGFNOID */

AggregateFnoidIndexId,

1,

{

Anum_pg_aggregate_aggfnoid,

0,

0,

0

},

32

},

{AccessMethodRelationId, /* AMNAME */

AmNameIndexId,

1,

{

Anum_pg_am_amname,

0,

0,

0

},

4

},

/* 中间内容省略 */

{UserMappingRelationId, /* USERMAPPINGUSERSERVER */

UserMappingUserServerIndexId,

2,

{

Anum_pg_user_mapping_umuser,

Anum_pg_user_mapping_umserver,

0,

0

},

128

}

};SysCache数组的大小(用SysCacheSize表示)就是这个静态数组的大小,也即系统表的个数:

static CatCache *SysCache[lengthof(cacheinfo)];

static int SysCacheSize = lengthof(cacheinfo);然后我们再看一下CatCache结构:

#define CATCACHE_MAXKEYS 4

typedef struct catcache

{

int id; /* cache identifier --- see syscache.h */

struct catcache *cc_next; /* link to next catcache */

const char *cc_relname; /* name of relation the tuples come from */

Oid cc_reloid; /* OID of relation the tuples come from */

Oid cc_indexoid; /* OID of index matching cache keys */

bool cc_relisshared; /* is relation shared across databases? */

TupleDesc cc_tupdesc; /* tuple descriptor (copied from reldesc) */

int cc_ntup; /* # of tuples currently in this cache */

int cc_nbuckets; /* # of hash buckets in this cache */

int cc_nkeys; /* # of keys (1..CATCACHE_MAXKEYS) */

int cc_key[CATCACHE_MAXKEYS]; /* AttrNumber of each key */

PGFunction cc_hashfunc[CATCACHE_MAXKEYS]; /* hash function for each key */

ScanKeyData cc_skey[CATCACHE_MAXKEYS]; /* precomputed key info for

* heap scans */

bool cc_isname[CATCACHE_MAXKEYS]; /* flag "name" key columns */

Dllist cc_lists; /* list of CatCList structs */

#ifdef CATCACHE_STATS

long cc_searches; /* total # searches against this cache */

long cc_hits; /* # of matches against existing entry */

long cc_neg_hits; /* # of matches against negative entry */

long cc_newloads; /* # of successful loads of new entry */

/*

* cc_searches - (cc_hits + cc_neg_hits + cc_newloads) is number of failed

* searches, each of which will result in loading a negative entry

*/

long cc_invals; /* # of entries invalidated from cache */

long cc_lsearches; /* total # list-searches */

long cc_lhits; /* # of matches against existing lists */

#endif

Dllist cc_bucket[1]; /* hash buckets --- VARIABLE LENGTH ARRAY */

} CatCache;其实SysCache内部的组织结构也主要就是CatCache内部的组织结构。我们先来张图,然后再讲解:

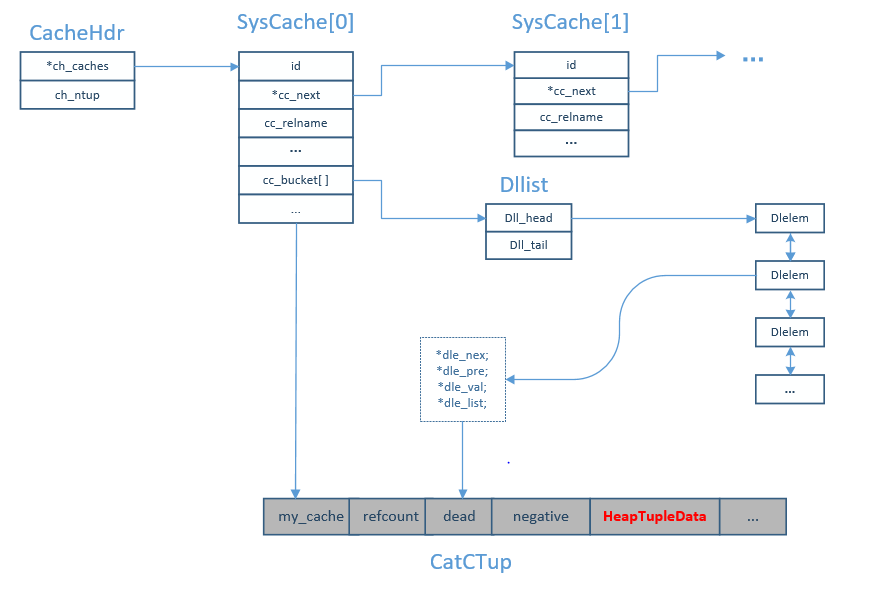

(1)SysCache数组里面的元素(都是CatCache结构)之间使用CatCache的cc_next字段连成了一个单向链表,链表的头部用全局变量CacheHdr记录,其数据结构如下:

typedef struct catcacheheader

{

CatCache *ch_caches; /* head of list of CatCache structs */

int ch_ntup; /* # of tuples in all caches */

} CatCacheHeader;

/* Cache management header --- pointer is NULL until created */

static CatCacheHeader *CacheHdr = NULL;(2)每个CatCache的cc_bucket数组中的每一个元素都表示一个Hash桶,元组的键值通过Hash函数可以映射到cc_bucket数组的下标。每一个Hash桶都被组织成一个双向链表(Dllist结构),其中的节点为Dlelem类型,Dlelem是一个包装过的缓存元组,其dle_val字段指向一个CatCTup形式的缓存元组:

typedef struct Dlelem

{

struct Dlelem *dle_next; /* next element */

struct Dlelem *dle_prev; /* previous element */

void *dle_val; /* value of the element */

struct Dllist *dle_list; /* what list this element is in */

} Dlelem;

typedef struct Dllist

{

Dlelem *dll_head;

Dlelem *dll_tail;

} Dllist;

typedef struct catctup

{

int ct_magic; /* for identifying CatCTup entries */

#define CT_MAGIC 0x57261502

CatCache *my_cache; /* link to owning catcache */

/*

* Each tuple in a cache is a member of a Dllist that stores the elements

* of its hash bucket. We keep each Dllist in LRU order to speed repeated

* lookups.

*/

Dlelem cache_elem; /* list member of per-bucket list */

/*

* The tuple may also be a member of at most one CatCList. (If a single

* catcache is list-searched with varying numbers of keys, we may have to

* make multiple entries for the same tuple because of this restriction.

* Currently, that's not expected to be common, so we accept the potential

* inefficiency.)

*/

struct catclist *c_list; /* containing CatCList, or NULL if none */

/*

* A tuple marked "dead" must not be returned by subsequent searches.

* However, it won't be physically deleted from the cache until its

* refcount goes to zero. (If it's a member of a CatCList, the list's

* refcount must go to zero, too; also, remember to mark the list dead at

* the same time the tuple is marked.)

*

* A negative cache entry is an assertion that there is no tuple matching

* a particular key. This is just as useful as a normal entry so far as

* avoiding catalog searches is concerned. Management of positive and

* negative entries is identical.

*/

int refcount; /* number of active references */

bool dead; /* dead but not yet removed? */

bool negative; /* negative cache entry? */

uint32 hash_value; /* hash value for this tuple's keys */

HeapTupleData tuple; /* tuple management header */

} CatCTup;具有同一Hash键值的元组被缓存在同一个Hash桶中,每一个Hash桶中的缓存元组都被先包装成Dlelem结构并链接成一个链表。因此在查找某一个元组时,需要先计算其Hash键值并通过键值找到其所在的Hash桶,之后要遍历Hash桶的链表逐一对比缓存元组。为了尽量减少遍历Hash桶的代价,在组织Hash桶中的链表时,会将这一次命中的缓存元组移动到链表的头部,这样下一次查找同一个元组时可以在尽可能少的时间内命中。Dlelem结构中的dle_list字段用来记录缓存元组所在链表的头部,以方便将该缓存元组移动到链表的头部。

(3)CatCache中缓存的元组将先被包装成CatCTup形式,然后加入到其所在Hash桶的链表中。在CatCTup中通过my_cache和cache_elem分别指向缓存元组所在的CatCache及Hash桶链表中的节点。一个被标记为“死亡”的CatCTup(dead字段为真)并不会实际从CatCache中删除,但是在后续的查找中它不会被返回。“死亡”的缓存元组将一直被保留在CatCache中,直到没有人访问它,即其refcount变为0.但如果“死亡”元组同时也属于一个CatCList,则必须等到CatCList和CatCTup的refcount都变为0时才能将其从CatCache中清除。CatCTup的negative字段表明该缓存元组是否为一个“负元组”,所谓负元组就是实际并不存在于系统表中,但是其键值曾经用于在CatCache中进行查找的元组。负元组只有键值,其他属性为空。负元组的存在是为了避免反复到物理表中去查找不存在的元组所带来的I/O开销,具体在后面SysCache的查找中介绍。

typedef struct catclist

{

int cl_magic; /* for identifying CatCList entries */

#define CL_MAGIC 0x52765103

CatCache *my_cache; /* link to owning catcache */

/*

* A CatCList describes the result of a partial search, ie, a search using

* only the first K key columns of an N-key cache. We form the keys used

* into a tuple (with other attributes NULL) to represent the stored key

* set. The CatCList object contains links to cache entries for all the

* table rows satisfying the partial key. (Note: none of these will be

* negative cache entries.)

*

* A CatCList is only a member of a per-cache list; we do not currently

* divide them into hash buckets.

*

* A list marked "dead" must not be returned by subsequent searches.

* However, it won't be physically deleted from the cache until its

* refcount goes to zero. (A list should be marked dead if any of its

* member entries are dead.)

*

* If "ordered" is true then the member tuples appear in the order of the

* cache's underlying index. This will be true in normal operation, but

* might not be true during bootstrap or recovery operations. (namespace.c

* is able to save some cycles when it is true.)

*/

Dlelem cache_elem; /* list member of per-catcache list */

int refcount; /* number of active references */

bool dead; /* dead but not yet removed? */

bool ordered; /* members listed in index order? */

short nkeys; /* number of lookup keys specified */

uint32 hash_value; /* hash value for lookup keys */

HeapTupleData tuple; /* header for tuple holding keys */

int n_members; /* number of member tuples */

CatCTup *members[1]; /* members --- VARIABLE LENGTH ARRAY */

} CatCList; /* VARIABLE LENGTH STRUCT */2. SysCache的初始化

在后台进程的主函数PostgresMain里面,调用InitPostgres进行一些初始化的时候,会调用InitCatalogCache函数对SysCache数组进程初始化,并建立由CacheHdr记录的CatCache链表。整个初始化的过程实际上就是填充SysCache数组中每个元素的CatCache结构的过程,填充需要的信息存在之前介绍的静态数组cacheinfo里面。

后面还会在初始化RelCache第三阶段函数RelationCacheInitializePhase3中调用InitCatalogCachePhase2函数对SysCache做第二阶段的初始化。这个阶段的初始化依旧是完善SysCache数组中的CatCache结构,主要是根据对应的系统表字段填充CatCache结构中的c_tupdesc、cc_relname、cc_hashfunc、cc_isname、cc_skey等字段。

SysCache数组初始化完成之后,CatCache内是没有任何元组的,但是随着系统运行时对于系统表的访问,CatCache中的系统表元组会逐渐增多。

初始化部分的代码虽然比较多,但都比较简单,这里就不细讲也不贴代码了。

3. SysCache中元组的查找

更准确的应该说是CatCache中元组的查找。在CatCache中查找元组有两种方式:精确匹配和部分匹配。前者用于给定CatCache所需的所有键值,并返回CatCache中能完全匹配这个键值的元组;而后者只需要给出部分键值,并将部分匹配的元组以一个CatCList的方式返回。

精确匹配查找由函数SearchCatCache函数实现:

/*

* SearchCatCache

*

* This call searches a system cache for a tuple, opening the relation

* if necessary (on the first access to a particular cache).

*

* The result is NULL if not found, or a pointer to a HeapTuple in

* the cache. The caller must not modify the tuple, and must call

* ReleaseCatCache() when done with it.

*

* The search key values should be expressed as Datums of the key columns'

* datatype(s). (Pass zeroes for any unused parameters.) As a special

* exception, the passed-in key for a NAME column can be just a C string;

* the caller need not go to the trouble of converting it to a fully

* null-padded NAME.

*/

HeapTuple

SearchCatCache(CatCache *cache,

Datum v1,

Datum v2,

Datum v3,

Datum v4)

{

ScanKeyData cur_skey[CATCACHE_MAXKEYS];

uint32 hashValue;

Index hashIndex;

Dlelem *elt;

CatCTup *ct;

Relation relation;

SysScanDesc scandesc;

HeapTuple ntp;

/*

* one-time startup overhead for each cache

*/

if (cache->cc_tupdesc == NULL)

CatalogCacheInitializeCache(cache);

#ifdef CATCACHE_STATS

cache->cc_searches++;

#endif

/*

* initialize the search key information

*/

memcpy(cur_skey, cache->cc_skey, sizeof(cur_skey));

cur_skey[0].sk_argument = v1;

cur_skey[1].sk_argument = v2;

cur_skey[2].sk_argument = v3;

cur_skey[3].sk_argument = v4;

/*

* find the hash bucket in which to look for the tuple

*/

hashValue = CatalogCacheComputeHashValue(cache, cache->cc_nkeys, cur_skey);

hashIndex = HASH_INDEX(hashValue, cache->cc_nbuckets);

/*

* scan the hash bucket until we find a match or exhaust our tuples

*/

for (elt = DLGetHead(&cache->cc_bucket[hashIndex]);

elt;

elt = DLGetSucc(elt))

{

bool res;

ct = (CatCTup *) DLE_VAL(elt);

if (ct->dead)

continue; /* ignore dead entries */

if (ct->hash_value != hashValue)

continue; /* quickly skip entry if wrong hash val */

/*

* see if the cached tuple matches our key.

*/

HeapKeyTest(&ct->tuple,

cache->cc_tupdesc,

cache->cc_nkeys,

cur_skey,

res);

if (!res)

continue;

/*

* We found a match in the cache. Move it to the front of the list

* for its hashbucket, in order to speed subsequent searches. (The

* most frequently accessed elements in any hashbucket will tend to be

* near the front of the hashbucket's list.)

*/

DLMoveToFront(&ct->cache_elem);

/*

* If it's a positive entry, bump its refcount and return it. If it's

* negative, we can report failure to the caller.

*/

if (!ct->negative)

{

ResourceOwnerEnlargeCatCacheRefs(CurrentResourceOwner);

ct->refcount++;

ResourceOwnerRememberCatCacheRef(CurrentResourceOwner, &ct->tuple);

CACHE3_elog(DEBUG2, "SearchCatCache(%s): found in bucket %d",

cache->cc_relname, hashIndex);

#ifdef CATCACHE_STATS

cache->cc_hits++;

#endif

return &ct->tuple;

}

else

{

CACHE3_elog(DEBUG2, "SearchCatCache(%s): found neg entry in bucket %d",

cache->cc_relname, hashIndex);

#ifdef CATCACHE_STATS

cache->cc_neg_hits++;

#endif

return NULL;

}

}

/*

* Tuple was not found in cache, so we have to try to retrieve it directly

* from the relation. If found, we will add it to the cache; if not

* found, we will add a negative cache entry instead.

*

* NOTE: it is possible for recursive cache lookups to occur while reading

* the relation --- for example, due to shared-cache-inval messages being

* processed during heap_open(). This is OK. It's even possible for one

* of those lookups to find and enter the very same tuple we are trying to

* fetch here. If that happens, we will enter a second copy of the tuple

* into the cache. The first copy will never be referenced again, and

* will eventually age out of the cache, so there's no functional problem.

* This case is rare enough that it's not worth expending extra cycles to

* detect.

*/

relation = heap_open(cache->cc_reloid, AccessShareLock);

scandesc = systable_beginscan(relation,

cache->cc_indexoid,

IndexScanOK(cache, cur_skey),

SnapshotNow,

cache->cc_nkeys,

cur_skey);

ct = NULL;

while (HeapTupleIsValid(ntp = systable_getnext(scandesc)))

{

ct = CatalogCacheCreateEntry(cache, ntp,

hashValue, hashIndex,

false);

/* immediately set the refcount to 1 */

ResourceOwnerEnlargeCatCacheRefs(CurrentResourceOwner);

ct->refcount++;

ResourceOwnerRememberCatCacheRef(CurrentResourceOwner, &ct->tuple);

break; /* assume only one match */

}

systable_endscan(scandesc);

heap_close(relation, AccessShareLock);

/*

* If tuple was not found, we need to build a negative cache entry

* containing a fake tuple. The fake tuple has the correct key columns,

* but nulls everywhere else.

*

* In bootstrap mode, we don't build negative entries, because the cache

* invalidation mechanism isn't alive and can't clear them if the tuple

* gets created later. (Bootstrap doesn't do UPDATEs, so it doesn't need

* cache inval for that.)

*/

if (ct == NULL)

{

if (IsBootstrapProcessingMode())

return NULL;

ntp = build_dummy_tuple(cache, cache->cc_nkeys, cur_skey);

ct = CatalogCacheCreateEntry(cache, ntp,

hashValue, hashIndex,

true);

heap_freetuple(ntp);

CACHE4_elog(DEBUG2, "SearchCatCache(%s): Contains %d/%d tuples",

cache->cc_relname, cache->cc_ntup, CacheHdr->ch_ntup);

CACHE3_elog(DEBUG2, "SearchCatCache(%s): put neg entry in bucket %d",

cache->cc_relname, hashIndex);

/*

* We are not returning the negative entry to the caller, so leave its

* refcount zero.

*/

return NULL;

}

CACHE4_elog(DEBUG2, "SearchCatCache(%s): Contains %d/%d tuples",

cache->cc_relname, cache->cc_ntup, CacheHdr->ch_ntup);

CACHE3_elog(DEBUG2, "SearchCatCache(%s): put in bucket %d",

cache->cc_relname, hashIndex);

#ifdef CATCACHE_STATS

cache->cc_newloads++;

#endif

return &ct->tuple;

}SearchCatCache的v1、v2、v3、v4几个参数都是用于查找元组的键值,分别对应于Cache中记录的元组搜索键,这4个参数分别对应CatCache数据结构中cc_key字段定义的查找键。

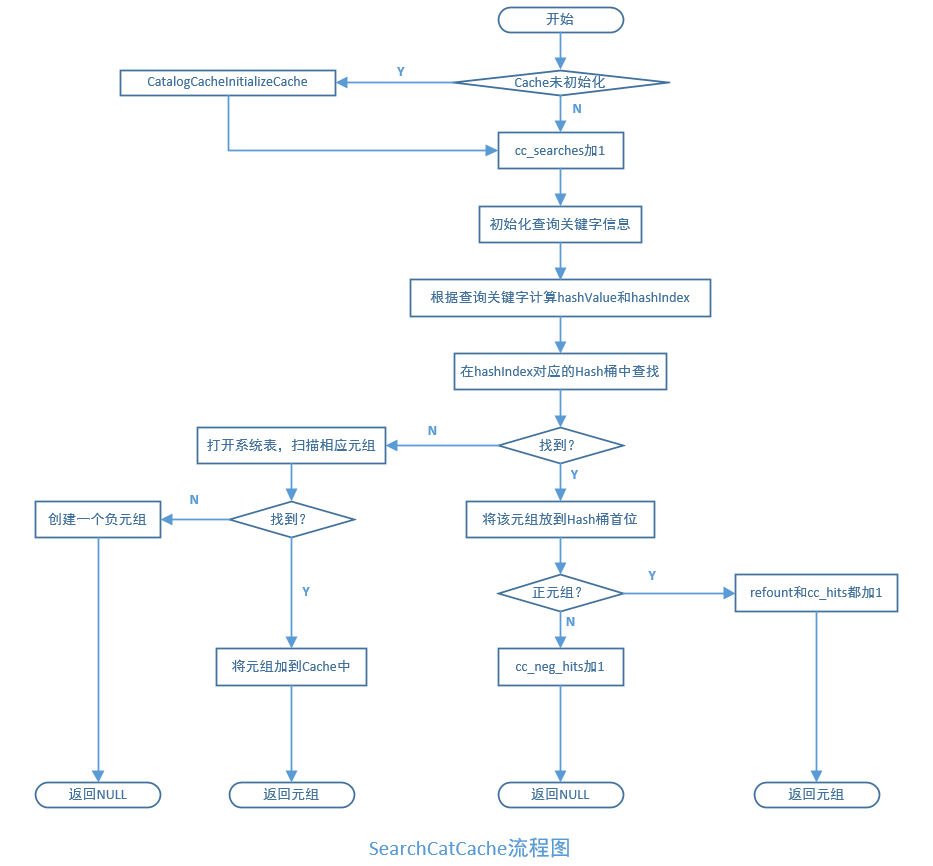

SearchCatCache需要在一个给定的CatCache中查找元组,为了确定要在哪个CatCache中进行查找,还需要先通过CacheHdr遍历SysCache中所有的CatCache结构体,并根据查询的系统表名或OID找到对应的CatCache。SearchCatCache在给定的CatCache中查找元组的过程如下:

(1)对所查找元组的键值进行Hash,安装Hash值得到该CatCache在cc_bucket数组中对应的Hash桶下标。

(2)遍历Hash桶链找到满足查询需求的Dlelem,并将其结构体中dle_val属性强制转换为CatCTup类型,CatCTup中的HeapTupleData就是要查找的元组头部。另外,还要将该Dlelem移到链表头部并将CatCache的cc_hits(命中计数器)加1.

(3)如果在Hash桶链中无法找到满足条件的元组,则需要进一步对物理系统表进行扫描,以确认要查找的元组的确是不存在还是没有缓存在CatCache中。如果扫描物理系统表能够找到满足条件的元组,则需要将该元组包装成Dlelem之后加入到其对应的Hash桶内链表头部。如果在物理系统表中找不到要查找的元组,则说明该元组确实不存在,此时构建一个只有键值但没有实际元组的“负元组”,并将它包装好加到Hash桶内链表的头部。

从SearchCatCache的查找过程中可以看到,由于CatCache只是一个缓存,因此即使在其中找不到某个元组也不能确定该元组是否存在于系统表中,还需要进一步扫描物理系统表来查找元组。但是,如果在CatCache中为这个不存在的元组放置一个“负元组”则可避免这些额外的开销,因为每次查找同一个不存在的元组时将会得到这个负元组,此时即可判定要查找的元组并不存在于系统表中,因而不用进一步去扫描物理系统表确认,从而造成浪费。

SearchCatCache的调用者不能修改返回的元组,并且使用完之后要调用ReleaseCatCache将其释放。SearchCatCache函数执行流程如下:

在CatCache中,部分匹配使用另外一个函数SearchCatCacheList,该函数产生一个CatCList结构,其中以链表的方式存放了在CatCache中找到的元组。CatList的tuple字段记录的是个负元组,他仅仅用来存放该CatCList所用到的键值,没有其他用途。CatCList中所包含的元组实际通过members字段表示的变长数据来记录,该数组的实际长度由n_members字段记录。

SearchCatCacheList函数也会先计算查找键的Hash值,不过该函数首先会在CatCache的cc_lists字段中记录CatCList链表中查找是否缓存了该查找键的结果,该查找过程将使用CatCList中tuple字段指向的元组与查找键进行Hash值比较。如果能够找到匹配该Hash值得CatCList,则cc_lhits加1并将该CatCList移到cc_lists所指向链表的头部,然后返回找到的CatCList。如果在CatCache中找不到CatCList,则扫描物理系统表并构建相应的CatCList并将它加入到cc_lists所指向的链表头部。同样,SearchCatCacheList的调用者不能修改返回的CatCList对象或者里面的元组,并且使用完之后要调用ReleaseCatCList将其释放。

三,RelCache

RelationData数据结构如下:

typedef struct RelationData

{

RelFileNode rd_node; /* relation physical identifier */

/* use "struct" here to avoid needing to include smgr.h: */

struct SMgrRelationData *rd_smgr; /* cached file handle, or NULL */

int rd_refcnt; /* reference count */

BackendId rd_backend; /* owning backend id, if temporary relation */

bool rd_isnailed; /* rel is nailed in cache */

bool rd_isvalid; /* relcache entry is valid */

char rd_indexvalid; /* state of rd_indexlist: 0 = not valid, 1 =

* valid, 2 = temporarily forced */

bool rd_islocaltemp; /* rel is a temp rel of this session */

/*

* rd_createSubid is the ID of the highest subtransaction the rel has

* survived into; or zero if the rel was not created in the current top

* transaction. This should be relied on only for optimization purposes;

* it is possible for new-ness to be "forgotten" (eg, after CLUSTER).

* Likewise, rd_newRelfilenodeSubid is the ID of the highest

* subtransaction the relfilenode change has survived into, or zero if not

* changed in the current transaction (or we have forgotten changing it).

*/

SubTransactionId rd_createSubid; /* rel was created in current xact */

SubTransactionId rd_newRelfilenodeSubid; /* new relfilenode assigned in

* current xact */

Form_pg_class rd_rel; /* RELATION tuple */

TupleDesc rd_att; /* tuple descriptor */

Oid rd_id; /* relation's object id */

List *rd_indexlist; /* list of OIDs of indexes on relation */

Bitmapset *rd_indexattr; /* identifies columns used in indexes */

Oid rd_oidindex; /* OID of unique index on OID, if any */

LockInfoData rd_lockInfo; /* lock mgr's info for locking relation */

RuleLock *rd_rules; /* rewrite rules */

MemoryContext rd_rulescxt; /* private memory cxt for rd_rules, if any */

TriggerDesc *trigdesc; /* Trigger info, or NULL if rel has none */

/*

* rd_options is set whenever rd_rel is loaded into the relcache entry.

* Note that you can NOT look into rd_rel for this data. NULL means "use

* defaults".

*/

bytea *rd_options; /* parsed pg_class.reloptions */

/* These are non-NULL only for an index relation: */

Form_pg_index rd_index; /* pg_index tuple describing this index */

/* use "struct" here to avoid needing to include htup.h: */

struct HeapTupleData *rd_indextuple; /* all of pg_index tuple */

Form_pg_am rd_am; /* pg_am tuple for index's AM */

/*

* index access support info (used only for an index relation)

*

* Note: only default support procs for each opclass are cached, namely

* those with lefttype and righttype equal to the opclass's opcintype. The

* arrays are indexed by support function number, which is a sufficient

* identifier given that restriction.

*

* Note: rd_amcache is available for index AMs to cache private data about

* an index. This must be just a cache since it may get reset at any time

* (in particular, it will get reset by a relcache inval message for the

* index). If used, it must point to a single memory chunk palloc'd in

* rd_indexcxt. A relcache reset will include freeing that chunk and

* setting rd_amcache = NULL.

*/

MemoryContext rd_indexcxt; /* private memory cxt for this stuff */

RelationAmInfo *rd_aminfo; /* lookup info for funcs found in pg_am */

Oid *rd_opfamily; /* OIDs of op families for each index col */

Oid *rd_opcintype; /* OIDs of opclass declared input data types */

RegProcedure *rd_support; /* OIDs of support procedures */

FmgrInfo *rd_supportinfo; /* lookup info for support procedures */

int16 *rd_indoption; /* per-column AM-specific flags */

List *rd_indexprs; /* index expression trees, if any */

List *rd_indpred; /* index predicate tree, if any */

Oid *rd_exclops; /* OIDs of exclusion operators, if any */

Oid *rd_exclprocs; /* OIDs of exclusion ops' procs, if any */

uint16 *rd_exclstrats; /* exclusion ops' strategy numbers, if any */

void *rd_amcache; /* available for use by index AM */

Oid *rd_indcollation; /* OIDs of index collations */

/*

* Hack for CLUSTER, rewriting ALTER TABLE, etc: when writing a new

* version of a table, we need to make any toast pointers inserted into it

* have the existing toast table's OID, not the OID of the transient toast

* table. If rd_toastoid isn't InvalidOid, it is the OID to place in

* toast pointers inserted into this rel. (Note it's set on the new

* version of the main heap, not the toast table itself.) This also

* causes toast_save_datum() to try to preserve toast value OIDs.

*/

Oid rd_toastoid; /* Real TOAST table's OID, or InvalidOid */

/* use "struct" here to avoid needing to include pgstat.h: */

struct PgStat_TableStatus *pgstat_info; /* statistics collection area */

} RelationData;

typedef struct RelationData *Relation;对RelCache的管理比SysCache简单很多,原因在于大多数时候RelCache中存储的RelationData的结构是不变的,因此PostgreSQL仅用一个Hash表来维持这样一个结构。对RelCache的查找、插入、删除、修改等操作也非常简单。当需要打开一个表时,首先在RelCache中寻找该表的RelationData结构,如果没有找到,则创建该结构并加入到RelCache中。

1. RelCache初始化

RelCache的初始化也在InitPostgres中,分为三个阶段:

- RelationCacheInitialize:这个阶段主要是调用hash_create函数为进程创建一个Hash表RelationIdCache(HTAB*类型),键为表的OID,Hash函数为oid_hash。

- RelationCacheInitializePhase2:这个阶段主要是读入一些共享的系统表信息,至少包含pg_database表和认证相关的系统表(pg_authid、pg_auth_members)的信息。

- RelationCacheInitializePhase3:这个阶段我们已经可以从系统表读取信息进行后续的一些初始化。这个阶段会确保pg_class、pg_attribute、pg_proc、pg_type这四个系统表记起相关索引的信息被加入到RelCache中。在PostgreSQL中,使用一个文件pg_internal.init文件来记录系统表的RelationData结构体,若该文件未损坏,则直接将其内容读入到RelCache中。否则,分别建立pg_class、pg_attribute、pg_proc、pg_type及其索引的RelationData结构,加入到RelCache上的Hash表中,并重写pg_internal.init文件。

2. RelCache的操作

RelCache的操作实质就是对Hash表的操作:

(1)插入:当打开新的表时,要把它的RelationData加到RelCache中。该操作通过宏RelationCacheInsert来实现:首先,根据系统表OID在Hash表中找到对应的位置,调用函数hash_search,指定查询模式为HASH_ENTER,该模式下若发现OID对应的Hash桶已经存在,则返回其指针;否则创建一个空的Hash桶,然后返回其指针。然后将返回的指针强制转换为RelIdCacheEnt,然后把打开表的RelationData赋值给reldesc字段。

typedef struct relidcacheent

{

Oid reloid;

Relation reldesc;

} RelIdCacheEnt;(2)查找:查找Hash表通过宏RelationIdCacheLookup实现:调用函数hash_search,指定查询模式为HASH_FIND,若找到ID对应的RelIdCacheEnt,则将其reldesc字段赋值给RELATION;否则,设置RELATION为NULL。

(3)删除:从Hash表中删除元素通过宏RelationCacheDelete实现:调用hash_search,指定查询模式为HASH_REVOKE,在该模式下,若找到对应的Hash桶,则将其删除;否则,返回NULL。

四,Cache同步

在PostgreSQL中,每一个进程都有属于自己的Cache。换句话说,同一个系统表在不同的进程中都有对应的Cache来缓存它的元组(对于RelCache来说缓存的是一个RelationData结构)。同一个系统表的元组可能同时被多个进程的Cache缓存,当其中某个Cache中的一个元组被删除或更新时,需要通知其他进程对其Cache进行同步。在PostgreSQL的实现中,会记录下已被删除的无效元组,并通过SI Message方式(即共享消息队列)在进程之间传递这一消息。收到无效消息的进程将同步的把无效元组(或RelationData结构)从自己的Cache中删除。

PostgreSQL 9.2.4版本支持五种无效消息传递方式:

typedef union

{

int8 id; /* type field --- must be first */

SharedInvalCatcacheMsg cc;

SharedInvalCatalogMsg cat;

SharedInvalRelcacheMsg rc;

SharedInvalSmgrMsg sm;

SharedInvalRelmapMsg rm;

} SharedInvalidationMessage;其中使SysCache中元组无效的是SharedInvalCatcacheMsg消息,使RelCache中RelationData结构无效的是SharedInvalRelcacheMsg消息。

其中id为0或正数表示CatCache(同时也表示产生该无效消息的CatCache的编号),不同的负值代表了不同的消息类型:

typedef struct

{

int8 id; /* cache ID --- must be first */

Oid dbId; /* database ID, or 0 if a shared relation */

uint32 hashValue; /* hash value of key for this catcache */

} SharedInvalCatcacheMsg;

#define SHAREDINVALCATALOG_ID (-1)

typedef struct

{

int8 id; /* type field --- must be first */

Oid dbId; /* database ID, or 0 if a shared catalog */

Oid catId; /* ID of catalog whose contents are invalid */

} SharedInvalCatalogMsg;

#define SHAREDINVALRELCACHE_ID (-2)

typedef struct

{

int8 id; /* type field --- must be first */

Oid dbId; /* database ID, or 0 if a shared relation */

Oid relId; /* relation ID */

} SharedInvalRelcacheMsg;

#define SHAREDINVALSMGR_ID (-3)

typedef struct

{

/* note: field layout chosen to pack into 16 bytes */

int8 id; /* type field --- must be first */

int8 backend_hi; /* high bits of backend ID, if temprel */

uint16 backend_lo; /* low bits of backend ID, if temprel */

RelFileNode rnode; /* spcNode, dbNode, relNode */

} SharedInvalSmgrMsg;

#define SHAREDINVALRELMAP_ID (-4)

typedef struct

{

int8 id; /* type field --- must be first */

Oid dbId; /* database ID, or 0 for shared catalogs */

} SharedInvalRelmapMsg;各个进程通过调用函数CacheInvalidateXXX系列函数对无效消息进行注册(在Inval.c文件中)。

本文参考总结自《PostgreSQL数据库内核分析》。

NYC's Blog

NYC's Blog

评论已关闭