之前有市场反馈Filebeat导致文件删除后磁盘空间无法释放,于是分析并重现了一下,下面记录一下。

熟悉Linux的人都知道,文件删除实际是将其inode链接数减1,当inode链接数变为0,且没有其它进程访问该文件时,操作系统就会真正删除文件,回收资源。“Filebeat导致文件删除后磁盘空间无法释放”的原因就是因为Filebeat打开着文件,所以系统无法真正删除文件,释放磁盘空间。而这个在官方文档里面其实是有说明的,且给出了解决方案:

Filebeat keeps open file handlers of deleted files for a long time?

In the default behaviour, Filebeat opens the files and keeps them open until it reaches the end of them. In situations when the configured output is blocked (e.g. Elasticsearch or Logstash is unavailable) for a long time, this can cause Filebeat to keep file handlers to files that were deleted from the file system in the mean time. As long as Filebeat keeps the deleted files open, the operating system doesn’t free up the space on disk, which can lead to increase disk utilisation or even out of disk situations.

To mitigate this issue, you can set the close_timeout setting to 5m. This will ensure every file handler is closed once every 5 minutes, regardless of whether it reached EOF or not. Note that this option can lead to data loss if the file is deleted before Filebeat reaches the end of the file.

第一部分说明了问题原因:当output也就是后端阻塞时,filebeat就会一直打开着文件。这样即使我们使用rm删除了文件,系统也没法真正删除文件并释放磁盘空间,这个问题可以很容易的复现一下。

问题复现

准备一个采集配置:

# test-delete.yml

filebeat.inputs:

- type: log

enabled: true

paths:

- /tmp/test-logs/*.log

close_inactive: 30s # 默认5m

close_removed: true # 默认就是开启的

output.elasticsearch:

enabled: true

hosts: ["127.0.0.1:9200"]

queue.mem:

events: 32

flush.min_events: 32注意:

- 要模拟后端阻塞,所以配置一个不存在的后端即可,这里使用的是ES,换成其它Filebeat支持的可阻塞后端即可(比如Kafka、Logstash),当然不论配哪个,都不需要真正去部署;

- 为了方便复现,将Filebeat内部的队列设置成最小值32;

- 准备一个日志文件,里面的日志行数最少有53行(后面解释原因)。

比如我在/tmp/test-logs/目录下创建了一个test-1.log,里面有53行日志:

line1

line2

...

line53然后运行Filebeat:

➜ filebeat-6.8.8-linux-x86_64 ./filebeat -e -c delete-test.yml

2021-02-27T10:40:15.452+0800 INFO instance/beat.go:611 Home path: [/root/software/filebeat-6.8.8-linux-x86_64] Config path: [/root/software/filebeat-6.8.8-linux-x86_64] Data path: [/root/software/filebeat-6.8.8-linux-x86_64/data] Logs path: [/root/software/filebeat-6.8.8-linux-x86_64/logs]

# 省略中间日志

2021-02-27T10:40:15.470+0800 INFO registrar/registrar.go:141 States Loaded from registrar: 0

2021-02-27T10:40:15.470+0800 INFO crawler/crawler.go:72 Loading Inputs: 1

2021-02-27T10:40:15.471+0800 INFO log/input.go:148 Configured paths: [/tmp/test-logs/*.log]

2021-02-27T10:40:15.471+0800 INFO input/input.go:114 Starting input of type: log; ID: 13166799806221673713

2021-02-27T10:40:15.471+0800 INFO crawler/crawler.go:106 Loading and starting Inputs completed. Enabled inputs: 1

2021-02-27T10:40:15.472+0800 INFO log/harvester.go:255 Harvester started for file: /tmp/test-logs/test-1.log

2021-02-27T10:40:15.474+0800 INFO pipeline/output.go:95 Connecting to backoff(elasticsearch(http://127.0.0.1:9200))

2021-02-27T10:40:17.476+0800 ERROR pipeline/output.go:100 Failed to connect to backoff(elasticsearch(http://127.0.0.1:9200)): Get http://127.0.0.1:9200: dial tcp 127.0.0.1:9200: connect: connection refused

2021-02-27T10:40:17.476+0800 INFO pipeline/output.go:93 Attempting to reconnect to backoff(elasticsearch(http://127.0.0.1:9200)) with 1 reconnect attempt(s)

# 省略重试过程

2021-02-27T10:40:37.847+0800 ERROR pipeline/output.go:100 Failed to connect to backoff(elasticsearch(http://127.0.0.1:9200)): Get http://127.0.0.1:9200: dial tcp 127.0.0.1:9200: connect: connection refused

2021-02-27T10:40:37.847+0800 INFO pipeline/output.go:93 Attempting to reconnect to backoff(elasticsearch(http://127.0.0.1:9200)) with 4 reconnect attempt(s)

# 注意看里面的"harvester":{"open_files":1,"running":1,"started":1}}

2021-02-27T10:40:45.472+0800 INFO [monitoring] log/log.go:144 Non-zero metrics in the last 30s {"monitoring": {"metrics": {"beat":{"cpu":{"system":{"ticks":70,"time":{"ms":73}},"total":{"ticks":140,"time":{"ms":151},"value":0},"user":{"ticks":70,"time":{"ms":78}}},"handles":{"limit":{"hard":100000,"soft":100000},"open":7},"info":{"ephemeral_id":"3f417841-c5cb-409b-ba88-16e577a0f07f","uptime":{"ms":30052}},"memstats":{"gc_next":4194304,"memory_alloc":2242696,"memory_total":5271520,"rss":27172864}},"filebeat":{"events":{"active":53,"added":54,"done":1},"harvester":{"open_files":1,"running":1,"started":1}},"libbeat":{"config":{"module":{"running":0}},"output":{"type":"elasticsearch"},"pipeline":{"clients":1,"events":{"active":53,"filtered":1,"published":52,"retry":128,"total":54}}},"registrar":{"states":{"current":1,"update":1},"writes":{"success":2,"total":2}},"system":{"cpu":{"cores":8},"load":{"1":0.57,"15":1.18,"5":0.85,"norm":{"1":0.0712,"15":0.1475,"5":0.1063}}}}}}可以看到后端不通,而且后面的monitor部分显示“"open_files":1”,也就是文件一直被打开着。此时,删除正在被采集的文件/tmp/test-logs/test-1.log,然后观察一下:

# 删除文件

➜ test-logs rm test-1.log

# ll查看文件,的确已经不存在了

➜ test-logs ll

total 0

# 使用lsof +L1查看

➜ test-logs lsof +L1

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NLINK NODE NAME

filebeat 18777 root 5r REG 253,0 362 0 1115687240 /tmp/test-logs/test-1.log (deleted)lsof +L1命令可以查看已经被删除但还被进程打开的文件。可以看到用rm删除之后,文件依旧被Filebeat占用,所以导致操作系统无法真正释放资源。此时,停掉Filebeat进程,再用lsof查看,就会发现已经没有了,文件已经被真正删除了。这样这个问题就复现了。

这部分最后解答一下之前为什么要求测试日志里面必须至少有53行日志?这个得结合Filebeat的原理和代码进行说明,后面计划单独写一篇Filebeat源码解析的文章,这里只简单解答这个问题。Filebeat的采集过程如下图:

- input:扫描要采集的文件,每个文件打开一个harvester(即一个gorouting);

- harvester:读取文件内容,以事件(event)的形式发送到spooler;

- spooler:即filebeat内部的队列,有内存和文件两种队列,默认为内存队列;

- publisher:负责从spooler中读取事件发送到后端(output),发送成功后,会给registrar发送ack;

- registrar:收到事件成功发送的ack之后,就将状态(采集的文件、及位置等信息)记录到registry文件中。

在上述过程中,不同的功能是由不用的goroutine实现的,这些goroutine之间就是通过go的channel通信的。上面画的是一个非常精简的流程,实际过程会比这个复杂一些:

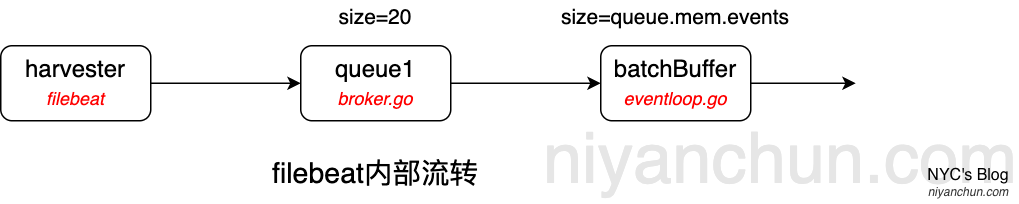

实际上harvester是将数据发送到producer的队列(channel),这个队列的大小是写死的20(libbeat/publisher/queue/memqueue/broker.go: chanSize := 20,这是个channel),然后数据又会通过EventLoop转移到一个batchBuffer,这个缓存的大小才是在配置文件里面配的queue.mem.events。其实后面还有个consumer又将数据搬到另外一个output队列,最后由有一个netClientWorker负责将数据发送出去,这些留到下篇源码解析文章讨论。现在由于后端不通,所以数据会一直积攒在batchBuffer里面。这样harvester能够发送的总数据大小就是20+queue.meme.events=20+32=52了。这就是53的由来,整个链路最大只能容纳52个事件,第53个事件来的时候,队列已经满了,harvester发送的时候就会阻塞在broker的channel上面。queue.meme.events的默认值是4096,如果不修改的话,那就需要多准备一些日志。

问题解决

上面官方给的解决方法是close_timeout参数。在讨论这个参数之前,我们先想一下close_inactive和close_removed两个参数能不能解决问题:

close_inactive:当文件在该参数设置的时间段内没有更新的话就关闭harvester,当然同时也会关闭文件;close_removed:如果文件在采集阶段被删除了,就关闭harvester,当然同时也会关闭文件;

从这两个参数的功能来看,似乎可以避免上面的问题。但这两个配置在上面复现时也配置了,结果是并没有解决问题,为什么呢?这个就得从Filebeat的代码层面来解答了。看下harvester的部分代码:

// Run start the harvester and reads files line by line and sends events to the defined output

func (h *Harvester) Run() error {

// 删除部分代码

// Closes reader after timeout or when done channel is closed

// This routine is also responsible to properly stop the reader

go func(source string) {

closeTimeout := make(<-chan time.Time)

// starts close_timeout timer

if h.config.CloseTimeout > 0 {

closeTimeout = time.After(h.config.CloseTimeout)

}

select {

// Applies when timeout is reached

case <-closeTimeout:

logp.Info("Closing harvester because close_timeout was reached: %s", source)

// Required when reader loop returns and reader finished

case <-h.done:

}

h.stop()

h.log.Close()

}(h.state.Source)

logp.Info("Harvester started for file: %s", h.state.Source)

for {

select {

case <-h.done:

return nil

default:

}

message, err := h.reader.Next()

if err != nil {

switch err {

case ErrFileTruncate:

logp.Info("File was truncated. Begin reading file from offset 0: %s", h.state.Source)

h.state.Offset = 0

filesTruncated.Add(1)

case ErrRemoved:

logp.Info("File was removed: %s. Closing because close_removed is enabled.", h.state.Source)

case ErrRenamed:

logp.Info("File was renamed: %s. Closing because close_renamed is enabled.", h.state.Source)

case ErrClosed:

logp.Info("Reader was closed: %s. Closing.", h.state.Source)

case io.EOF:

logp.Info("End of file reached: %s. Closing because close_eof is enabled.", h.state.Source)

case ErrInactive:

logp.Info("File is inactive: %s. Closing because close_inactive of %v reached.", h.state.Source, h.config.CloseInactive)

case reader.ErrLineUnparsable:

logp.Info("Skipping unparsable line in file: %v", h.state.Source)

//line unparsable, go to next line

continue

default:

logp.Err("Read line error: %v; File: %v", err, h.state.Source)

}

return nil

}

// 删除部分代码

// Always send event to update state, also if lines was skipped

// Stop harvester in case of an error

if !h.sendEvent(data, forwarder) {

return nil

}

// Update state of harvester as successfully sent

h.state = state

}

}分析一下这部分代码(为了突出重点,把不相关的代码删掉了):

- 前面启动了一个goroutine,如果定义了

close_timeout,就会启动一个定时器,当定时器触发后,就会关闭当前goroutine,这也是官方文档中提到的解决方式可行的原因; - for循环里面的

h.sendEvent(data, forwarder)就是向后边队列(实际是大小为20的channel)发送数据的; - for循环里面的

h.reader.Next()是读取文件的。

从这个逻辑就可以解释为什么close_inactive和close_removed不能解决问题了:因为它们两个配置的逻辑是在for循环里面的switch部分的。当队列满了以后,当前harvester会阻塞在h.reader.Next()发送这里,根本无法回到前面的switch部分。不过反过来大家也可以实验一下,如果你的日志行数少于20+queue.mem.events,那在队列满之前就会走到switch部分,这样harvester就会关闭,同时也会关闭文件,就不会产生上面问题了。

而close_timeout能解决问题就是因为它的功能是在另外一个独立的goroutine中实现的,所以即使采集的goroutine阻塞在channel上了,它也可以从“外部”将阻塞的goroutine停掉。但这个参数并非完美无缺,它是有弊端的,主要有两个潜在的问题:

- 可能造成数据丢失。因为文件没采集完,harvester就被强制关闭了。特别是当

close_timeout大于等于ignore_older时,大概率会丢失数据。因为当close_timeout时间到了的时候,ignore_older的时间也到了,此时如果该文件数据没有采集完,且后面该文件没有再更新的话,这个文件里面剩余的数据就丢了,永远不会被采集了。所以如果设置这个参数,一定要保证它的值不大于ignore_older。 - 如果使用了multiline,该合并成一个event的数据可能会被拆分成多个event。如果在multiline处理阶段超时时间到了的话,harvester就会将已经合并的部分作为一个event发送。当下次这个文件被采集时,剩下的部分又会被当成另外一个event发送。

总结

Filebeat有非常多的参数可以配置,但这也是一把双刃剑,如果你使用的好,它就是一个非常灵活,高度可配置的工具;使用的不好,就可能会丢数据,或者占用非常多的资源,你还不知道为什么。比如本文讨论的这个占用文件导致文件无法被删除的场景,虽然可能比较少,但的确可能会出现。当问题出现的时候,你必须明白为什么,以及使用close_timeout的时候也要知道他可能导致的问题,以及如何通过联动多个相关配置项来尽量去避免它的弊端。

NYC's Blog

NYC's Blog

评论已关闭